New Concurrency APIs in C++ 17 (Parallel Algorithms in STL)

By: Jie

Introduction

To write a multi-threading program, programmers need to call platform APIs or third-party library APIs (e.g., POSIX Thread APIs or Boost Thread APIs) prior to the introduction of Concurrency APIs in C++ 11.

To make the code more portable, C++ started introducing Concurrency APIs in C++ 11, when std::thread became available so programmers can use a uniform API rather than call pthread_create() in POSIX or CreateThread() in Windows to create threads. Mutex, future/async, and condition variables were also introduced, which are necessary low-level APIs to develop a concurrent program.

C++ 14 didn’t do too much in terms of concurrency. It mainly introduced Reader-Writer locks that are still low-level concurrency APIs.

There are 150+ algorithms (e.g., search, sort) in Standard Template Library (STL). They are sequential algorithms. To leverage the power of multiple cores, programmers have to parallelize these libraries using the low-level concurrency APIs aforementioned. It is not an easy task – programmers need to understand some complex strategies such as work-stealing and thread pool.

Now, C++ 17 comes to the rescue. It not only parallelizes almost all STL algorithms but also supports vectorization. This article will show you how to use parallelized/vectorized versions of STL algorithms through a couple of examples.

Prerequisites

I’ll use Clang 10 and Ubuntu 18.04 to compile and run samples through this article. To install clang10:

$ sudo apt update $ sudo apt install clang

Clang relies on TBB (Thread Building Block, a library from Intel) to implement C++ 17 concurrency APIs. To install TBB:

$ sudo apt install libtbb-dev

The following diagram shows the CMakeLists.txt we use:

cmake_minimum_required(VERSION 3.16) project(cbegin) set(CMAKE_CXX_COMPILER "/usr/bin/clang++") set(CMAKE_CXX_STANDARD 17) add_executable(blog main.cpp) target_link_libraries(blog tbb)

command on Line 8 links the program with TBB.

An example of sorting

STL implements a sort algorithm std::sort(). The following program shows how to sort one billion integers using the default sequential std::sort.

#include <iostream>

#include <algorithm>

#include <chrono>

// 1G integers

constexpr long COUNT = 1024*1024*1024;

int main() {

int32_t *data{new int32_t[COUNT]};

typedef std::chrono::high_resolution_clock Clock;

auto t1 = Clock::now();

std::sort(data, data+COUNT);

auto t2 = Clock::now();

auto elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(t2 - t1);

std::cout << elapsed.count() << '\n';

}

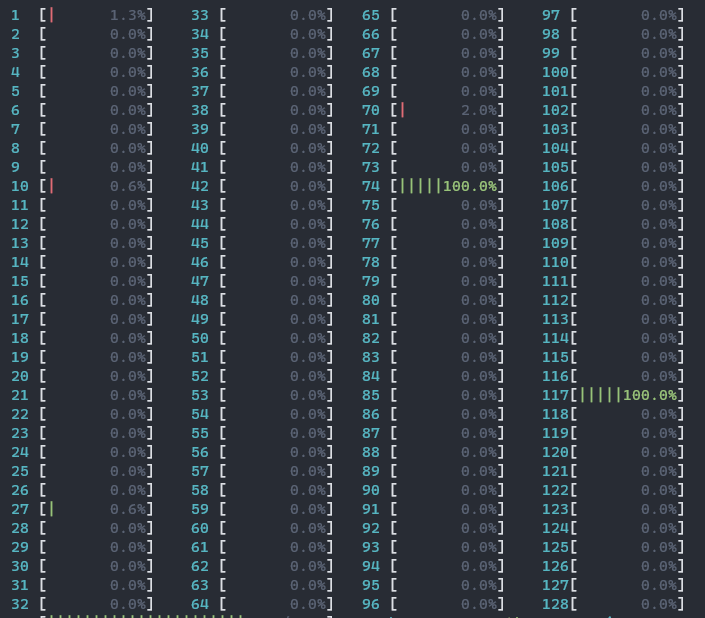

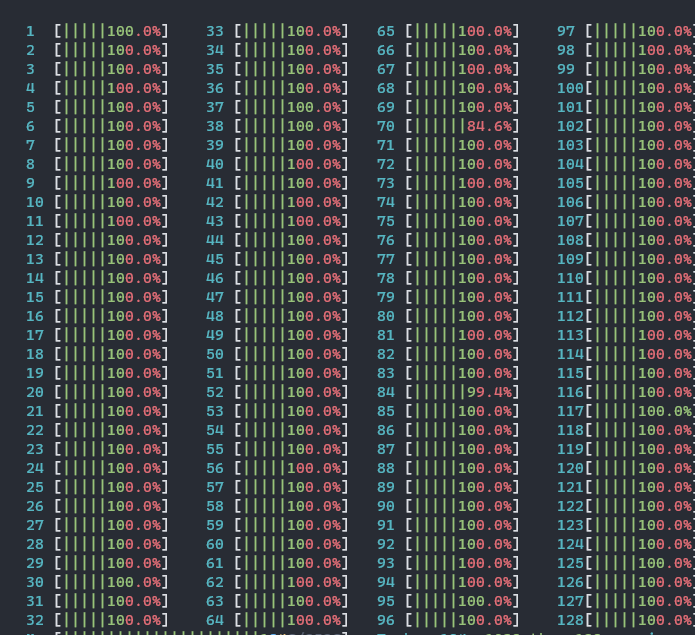

It takes about 178.764 seconds to sort the array on my 128-threads (64-cores) machine. The screenshot shows it uses only one thread (note: there are two busy threads. One of them is being used by another program).

The following code snippet shows a parallelized version of std::sort.

#include <iostream>

#include <algorithm>

#include <execution>

#include <chrono>

// 1G integers

constexpr long COUNT = 1024*1024*1024;

int main() {

int32_t *data{new int32_t[COUNT]};

typedef std::chrono::high_resolution_clock Clock;

auto t1 = Clock::now();

std::sort(std::execution::par, data, data+COUNT);

auto t2 = Clock::now();

auto elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(t2 - t1);

std::cout << elapsed.count() << '\n';

}

It takes only about 3.035 seconds to finish the same job on the same machine. All 128 threads were busy as shown in the screenshot.It takes about 3.035 seconds to finish the job on the same machine. You can tell all 128 threads are busy from the screenshot.

What are the differences between these two versions?

- Line 3 – includes a new header file called “execution” that defines several execution policies. These policies tell std::sort how developers want to run sorting – sequential or parallel.

- Line 15 – uses a new version of std::sort whose first parameter is the execution policy. In our example, we use “std::execution::par” to tell STL to sort integers parallelly.

Execution Policies

C++ 17 offers the execution policies for most of the algorithms:

- sequenced_policy (and the corresponding global object std::execution::seq) – tells STL to execute your algorithm sequential.

- parallel_policy (and the corresponding global object std::execution::par) – tells STL to execute your algorithm in parallel.

- parallel_unsequenced_policy (and the corresponding global object std::execution::par_unseq) – tells STL to execute your algorithm in parallel with also ability to use vector instructions (like SSE, AVX).

The following example illustrates how to execute std::find in parallel.

#include <iostream>

#include <algorithm>

#include <execution>

// 1G integers

constexpr size_t COUNT = 1024*1024*1024;

int main() {

int32_t *data{new int32_t[COUNT]};

auto itr = std::find(std::execution::par, data, data+COUNT, 101);

if (it != data+COUNT)

std::cout << *itr << '\n';

}

Race Condition

For the execution policy of parallel_policy and parallel_unsequenced_policy, STL uses a pool of threads to process data simultaneously. It’s therefore possible to introduce race conditions into your program.

The following example tries to increase a counter after it processes a data item.

#include <iostream>

#include <algorithm>

#include <execution>

#include <chrono>

constexpr size_t COUNT = 8L*1024*1024*1024;

int main() {

int32_t *data1{new int32_t[COUNT]};

int32_t *data2{new int32_t[COUNT]};

int32_t *res{new int32_t[COUNT]};

int64_t counter = 0;

typedef std::chrono::high_resolution_clock Clock;

auto t1 = Clock::now();

std::transform(std::execution::par, data1, data1+COUNT, data2, res, [&counter](int i, int j){

counter++;

return i + j; });

auto t2 = Clock::now();

auto elapsed = std::chrono::duration_cast<std::chrono::milliseconds>(t2 - t1);

std::cout << elapsed.count() << '\n';

std::cout << "counter=" << counter << '\n';

}

Line 12 – defines a counter.

Line 17-19 – the lambda will increase the counter after it finishes the processing of a data item. Since there are multiple threads executing this piece of logic in parallel, they may update the “counter” at the same time.

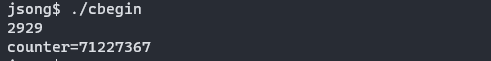

I ran the program on the same machine and got

You can find the counter is much smaller than the correct value 8,589,934,592. Coderrect is working on this, stay tuned for more details soon.

More are coming …

This article quickly goes through the parallel STL algorithms introduced by C++ 17. What’s next?

C++ 20 was approved in September 2020, which introduces more concurrency APIs such as latches, barriers, and coroutines. Our next article will cover its extensions to std::future.